HAND, CDSA Take a Deep Dive Into the Battle Against Deepfakes

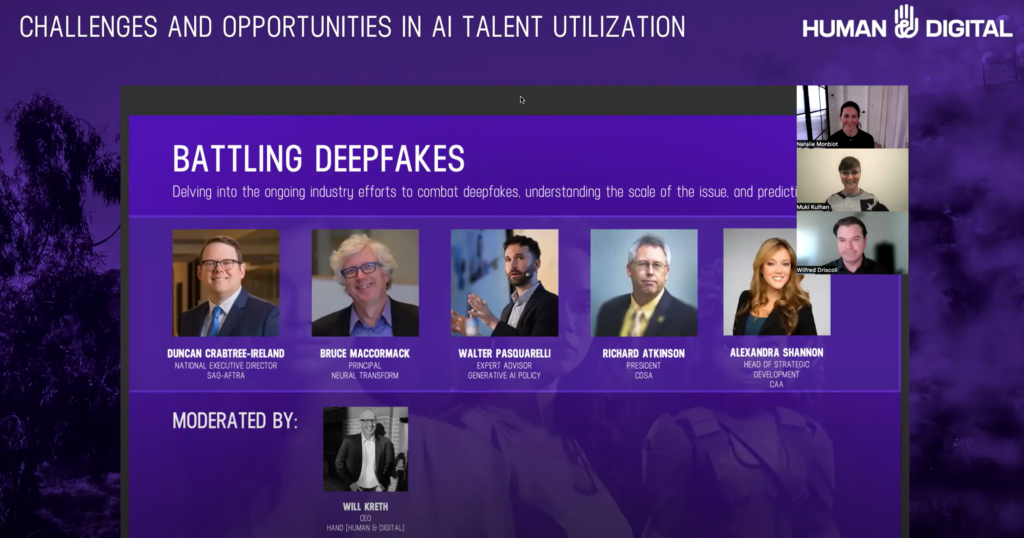

Artificial intelligence (AI) experts and media and entertainment industry executives explored the growing battle against deepfakes on Feb. 7, during the second of three panel discussions at the first webinar held by Human & Digital (HAND), “Challenges & Opportunities in AI Talent Utilization.”

During the panel session “Battling Deepfakes,” panelists delved into the ongoing industry efforts to combat deepfakes, as well as the scale of the issue.

Kicking off the discussion, moderator Will Kreth, CEO of HAND, pointed to a CNN news article that reported a finance worker at a multinational firm was “tricked into paying $25 million to fraudsters [who used] deepfake technology to pose as the company’s chief financial officer in a video conference call,” according to Hong Kong police.

“So here we are,” Kreth said. “We now live in a world where not only are deepfakes being used against celebrities and politicians, like the Taylor Swift deepfake pornography story from January, and the robocalls of President Biden, but also real millions of dollars are being stolen through the use of convincing deepfakes.”

Kreth addressed his first question to Duncan Crabtree, national director of the Screen Actors Guild (SAG)-American Federation of Television and Radio Artists (AFTRA), noting that Crabtree was “deepfaked” last fall. “From your perspective, what are the financial, legal and social implications to the entertainment industry?” Kreth asked.

“Someone made a deepfake of me and had a video of me out there advocating against the [SAG-AFTRA] contract,” Crabtree said. The deepfake version of him was “telling people to vote against the contract that I myself had spent a year preparing for and negotiating,” he pointed out.

He explained: “It hits differently when you see yourself out there, saying something that you really don’t believe in, using your face, your voice. And so, not that I encourage people to try it out, but I do just want to say, there is a feeling to it that’s hard to get if you haven’t experienced it. Seeing someone else sort of abuse your persona in that way. And so I think we always have to bring it back to the human impact. Whether it’s a financial impact, whether it’s an impact on our ability to trust what we see that has such huge implications.”

He made note of how important it is that “all of us in our society … know that trusted sources are really legitimate trusted sources in the way that we’ve always done that.”

Crabtree added: “In terms of those implications, obviously there are big financial implications, both to companies but also to human beings. Being deepfaked can damage their careers. It can really harm them personally in terms of the feeling of personal abuse. And, of course, there’s the really traumatic impact on our culture that can come from losing a sense of trust in people and institutions that we’ve always been able to believe in or rely on or assess.”

To help achieve that, he said: “We have some legislation that we’re trying to get enacted at the federal level … to provide new tools for that, and also [make] sure that, as deepfake technology in other forms is deployed, it is deployed in ways that actually support creative talent, that support human beings, and that make sure that when there’s a digital replica, it’s not a deepfake in the negative sense of that term.”

“That can only be done with the consent of the people involved, and for purposes that help further us, culturally and socially,” he added.

Elaborating on the example given earlier about the person who was deceived in Hong Kong, Richard Atkinson, president of the Content Delivery Security Association (CDSA), said: “He transferred $25 million. Most deepfakes we’ve seen to date have been kind of pre-recorded… There’s still images or video images. What he claims is he was on a Zoom call with his CFO and multiple other employees of the company. All of them were dynamically generated in real-time and all appear to be real. So we all could be fake…. That’s really what we’re facing.”

Atkinson explained: “I think the other thing we’re facing is that a lot of Americans, maybe up to one third, don’t necessarily want the truth. They want a truth that aligns with their belief structure, which right now is a political backdrop in the country. But, regardless, I think we certainly, as a trade association for media and entertainment, support all these efforts and focus on having maximum creativity in storytelling. So we use this as a tool. There’s fact and there’s fiction. But we’ve got to make sure we don’t have fiction masquerading as fact. And that’s really what we’re talking about when we’re talking about deception.”

Now, “we’re making great inroads,” he said, calling the Coalition for Content Provenance and Authenticity (C2PA) a “huge move forward” for the industry.

C2PA is an open technology standard and a Joint Development Foundation project, formed through an alliance between Adobe, Arm, Intel, Microsoft and Truepic. The standard addresses the prevalence of misleading information online by developing technical specifications for certifying the source, or provenance, of content.

The panel’s other speakers were Alexandra (Alex) Shannon, head of strategic development at Creative Artists Agency (CAA); Bruce MacCormack, principal of Neural Transform and co-founder of Project Origin and C2PA; and Walter Pasquarelli, expert advisor on Gen AI Policy.