Irdeto: Make Sure to Secure Your AI From Bad Actors

Artificial intelligence (AI) and machine learning (ML) keep advancing, and the resulting tools serve good actors and bad actors alike, so it is more important than ever to have security in place to protect your AI models, according to Will Hickie, data science architect at Irdeto.

Speaking during the presentation “Securing Artificial Intelligence” May 12 at the annual Hollywood Innovation and Transformation Summit (HITS) Spring event, he said Irdeto has “spent years building ML models and the capabilities that they provide have significant value.”

However, “once a model is built, it’s just like any other piece of digital content; it can be copied or shared, used and abusing it becomes very easy,” he warned.

During the presentation, he explored how AI is being used across a wide variety of industries to automate human capabilities and streamline workflows, increase productivity and efficiencies, lower costs, and solve complex problems. But, from suggestions by Siri, to smart cars, to asset creation for films or games, to box office prediction models, AI applications aren’t being protected nearly enough, according to Hickie.

AI models are costly and take a lot of time to build, making them extremely valuable intellectual property to protect, he pointed out. Just as organizations protect their data and computer networks from hacking, securing AI solutions is crucial to prevent misuse or interference, according to Hickie.

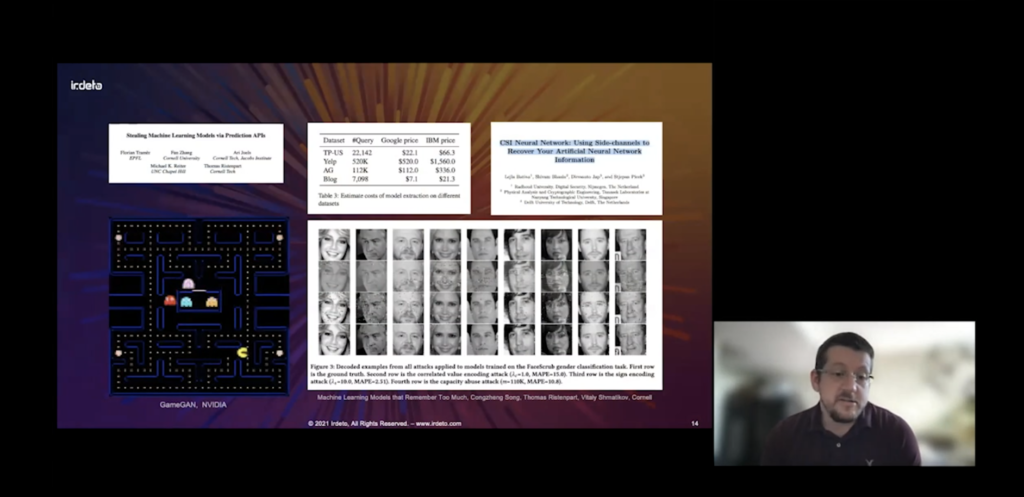

Hickie shared details on watermarking and other developments in securing AI from existing attacks (including data poisoning and network copying/cloning).

Hickie pointed out at the start of the presentation that he focuses mainly on ML and security, in particular how they can interact, noting he’s been writing software for 30 years and first started with ML in the late 1990s building video surveillance algorithms.

A Whole New World

“There’s been some changes since the 90s,” Hickie told viewers. “The tools we’re building today can make decisions and interact with the world in a way that was once the exclusive domain of people. And these tools, like all tools, can be used for good or for not good and, unlike say a hammer or a pencil, because of the power in these tools, in some sense, controlling how they’re used is going to be more important than ever before.”

This comes as “the amount of data available to people like me has just exploded exponentially,” he pointed out. At the same time, “the cost of computing has come down quite a bit,” he said.

“The computer that we all carry around in our pocket is considerably more powerful than the thing that I had starting out in the 1980s, and what this has resulted in is an increase in complexity,” he pointed out.

However, “the technologies we’re using today, in terms of the algorithms and the concepts, [are] not all that different than what people had 30, even 40, years ago – it’s just there’s more of it,” he said.

And “more data means more relationships that you can mine and more compute means more time and better abilities to mine those relationships,” he noted, adding: “In real terms, this has resulted in an increased capability for people.”

He pointed to a problem he’s been interested in for years called image inpainting, a process for reconstructing missing parts of an image that started in the world of physical art conservation and is now also being used in digital media.

“Spatial and temporal awareness” being used today “just wasn’t possible before,” he noted, adding that, with just a short video clip, you can now tell what a person would look like from a very different perspective. “You can even slow time down if you like,” he said, explaining: “This could have some interesting applications, especially in a world increasingly dominated by video conferencing.”

Today, “if you don’t have a person to act as a model, you can now generate completely fake, photo-realistic people out of thin air using” AI, he said, pointing to examples from a website called Generated Photos. “It’s now possible to make a very lifelike and fully animated fake person,” he noted.

And “it’s not just images” that can be created this way, he said, pointing to the GPT-3 natural language processing model.

Sky High Costs in Money and Time

It is not cheap though. “Based on their price tag, they’re not really toys,” Hickie said of AI and ML models. The estimated cost, based on just the compute time, is “close to $5 million,” he said, referring to training cost.

In addition, “building the ML models used to generate those realistic faces takes over a month of computing using” an Nvidia Tesla V100 GPU, which is a “state-of-the-art” piece of hardware for ML, he said.

“These models represent significant investments,” so it is absolutely critical to secure these models, he said.

There is, meanwhile, now an “entire industry around generating fake product reviews” and “generating fake influence,” he noted. AI tools are “making it increasingly difficult for platforms to distinguish between who is real and who isn’t, and for digital platforms, this represents a threat to their credibility and, ultimately, a threat to the marketplaces and the products that are relying on them,” he explained.

The New Arms Race

Adversarial ML can be used to figure out where to put the pattern of spray paint and black and white stickers on a stop sign to trick a sophisticated machine vision system into thinking the stop sign is a speed limit sign, Hickie noted.
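The idea behind that kind of attack can be illustrated with a minimal sketch. It assumes a toy linear classifier rather than a real machine vision system, and every weight, input and function name below is hypothetical, not taken from the presentation. Each input feature is nudged by a small, bounded amount in the direction that most lowers the model's score (a fast-gradient-sign-style step), which is enough to flip the label:

```python
# Hypothetical toy example: a linear "sign classifier" attacked with a
# fast-gradient-sign-style perturbation. All weights and inputs are made up.

def score(weights, bias, x):
    """Linear score; positive means 'stop', otherwise 'speed_limit'."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def classify(weights, bias, x):
    return "stop" if score(weights, bias, x) > 0 else "speed_limit"

def sign(v):
    return (v > 0) - (v < 0)

def adversarial_example(weights, x, epsilon):
    """Shift each feature by at most epsilon in the direction that lowers the score.

    For a linear model, the gradient of the score with respect to the input
    is the weight vector itself, so the step is -epsilon * sign(w).
    """
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]

weights = [0.9, -0.4, 0.7, -0.8]   # assumed model parameters
bias = -0.2
clean = [0.6, 0.3, 0.5, 0.2]       # assumed features of an unmodified sign

perturbed = adversarial_example(weights, clean, epsilon=0.25)
clean_label = classify(weights, bias, clean)
perturbed_label = classify(weights, bias, perturbed)
print(clean_label, "->", perturbed_label)
```

Real attacks on vision systems work on deep networks and physical objects, so they are considerably harder than this, but the underlying principle of small, targeted changes is the same.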

“We’re in a new arms race and I don’t think anybody really knows where it’s going,” he conceded, adding: “I don’t think it’s a threat to humanity or anything like that. But it does pose some unique challenges and it’s going to force us to rethink some of the ways that we think about digital content, social media and computing in general.”

One major component of security is accessibility, he went on to say, explaining: “The more secure something is, usually the more controlled the access to that thing becomes. In the real world, systems of information are often valuable because they’re accessible so we can’t just lock them up and throw away the key. Instead, we build access control areas like log-ins and passwords and you put a lock on the door. And AI is a fantastic tool to help control all kinds of access. Most of us benefit from some form of AI-based access control with our spam filters in our email and these exist to control who has access to your inbox and, more importantly, who has access to your time.”

Those spam filters took a long time to arrive. Now, researchers are spending a lot of time on anomaly detection, according to Hickie.

However, that is “not a be-all and end-all” solution because there is “always a way to get around things,” he conceded. But such measures can increase the cost of an attack, and the adversary will “probably move on to an easier target,” he said.

Hickie then discussed the importance of watermarking, which lets an organization know if somebody is using its IP and often how, he said.

Advancements in ML are making things a little more complicated, he told viewers. We are, as a result, “entering an era where fingerprinting a static object is just not going to be adequate,” he warned, pointing to three specific challenges: a watermark must not impact performance or quality; it must be persistent; and it must be resilient.
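One way to picture those requirements is a trigger-set watermark, a technique from the research literature that the presentation did not necessarily describe. In this minimal sketch, the owner derives a secret set of inputs with chosen labels, and a suspect model is flagged if it reproduces enough of them. The key, the stand-in models and the 0.9 threshold are all assumptions made for illustration:

```python
import hashlib

def make_trigger_set(secret_key, n_triggers, n_classes):
    """Derive (trigger_id, label) pairs deterministically from a secret key."""
    triggers = []
    for i in range(n_triggers):
        digest = hashlib.sha256(f"{secret_key}:{i}".encode()).digest()
        trigger_id = digest[:8].hex()   # stand-in for a secret input
        label = digest[8] % n_classes   # label the owner trains the model to emit
        triggers.append((trigger_id, label))
    return triggers

def watermark_match_rate(model, triggers):
    """Fraction of secret triggers on which the model gives the owner's label."""
    hits = sum(1 for trigger_id, label in triggers if model(trigger_id) == label)
    return hits / len(triggers)

def is_watermarked(model, triggers, threshold=0.9):
    # A threshold below 1.0 tolerates some damage to the mark (resilience).
    return watermark_match_rate(model, triggers) >= threshold

triggers = make_trigger_set("owner-secret", n_triggers=50, n_classes=10)
memorized = dict(triggers)

def marked_model(x):
    # Stand-in for a model trained to memorize the trigger labels.
    return memorized.get(x, 0)

forgotten = {trigger_id for trigger_id, _ in triggers[:3]}

def damaged_model(x):
    # Stand-in for a copy that lost a few triggers, e.g. after fine-tuning.
    return 0 if x in forgotten else marked_model(x)

def unrelated_model(x):
    # Stand-in for an independently built model that never saw the key.
    return int(x, 16) % 10
```

Because the triggers are secret inputs that ordinary users never send, a mark like this leaves normal predictions untouched, and checking a match rate against a threshold rather than demanding a perfect score is what lets it survive partial tampering.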

Two important areas today are deepfake detection and defending against side-channel attacks, which come from things an organization did not anticipate, he said, noting there is no single answer to such attacks.

HITS Spring was presented by IBM Security with sponsorship by Genpact, Irdeto, Tata Consultancy Services, Convergent Risks, Equinix, MicroStrategy, Microsoft Azure, Richey May Technology Solutions, Tamr, Whip Media, Eluvio, 5th Kind, LucidLink, Salesforce, Signiant, Zendesk, EIDR, PacketFabric and the Trusted Partner Network.