Edgescan COO: Traditional Vulnerability Management Continues to Fail

Full-stack computer systems and applications across the enterprise space continued to be riddled with vulnerabilities in 2019, and traditional vulnerability management continues to earn a failing grade, according to Rahim Jina, COO and co-founder of Edgescan.

Edgescan’s 2020 Vulnerability Statistics Report found that 34.78% of all external-facing web application vulnerabilities were high or critical risk in 2019, up from 19.2% in 2018, he said Oct. 20 during the online Media & Entertainment Day event.

Meanwhile, 40.35% of all internal web application vulnerabilities were at high or critical risk, he pointed out during the Threat Vectors & Monitoring breakout session “Enemy at the Gates: Why Traditional Vulnerability Management has Failed.”

By contrast, only 4.79% of all public Internet-facing network/host-layer vulnerabilities discovered were high or critical risk, he said, adding that the risk was still in the web/application programming interface (API)/Layer 7 space.

When it comes to the “most common vulnerabilities across the full stack,” he said, “cryptography issues are still massive” and “nearly 40 percent of all issues across the full stack are crypto-related” (39.7% to be precise).

“There’s just sheer massive volume of them and that seems to be the case nearly every year, and it seems to be getting worse actually,” he told viewers.

Cross-site scripting (XSS), which ranked No. 2 at 12%, “is another massive one, [with] a serious problem there in the web app layer,” he pointed out, noting that these vulnerabilities leave the door open for “phishing and all sorts of other types of attacks against end users.” After all, he asked: “Why attack your bank directly when you can attack your end user? It’s a bit of a softer target.” XSS flaws are “also massive and they’re very, very common,” he said.

That was followed by patching-related issues at 8% and by exposed data/information disclosure issues, which let attackers obtain information, at 7.1%, he noted.

Turning to how Common Vulnerabilities and Exposures (CVEs) cluster on systems, he said 77.36% of systems had at least one CVE, 67.67% had more than one and 15.05% had more than 10.

The systems in that “whopping 15 percent” were often unpatched, old or legacy systems that have not “been given much love,” making them big targets for attackers, he said.

The oldest CVE found last year dated from 1999, he pointed out, noting that it was disclosed back then and a patch was released for it at the time. A huge number of the CVEs found dated from 2018, the year covered by the previous report.

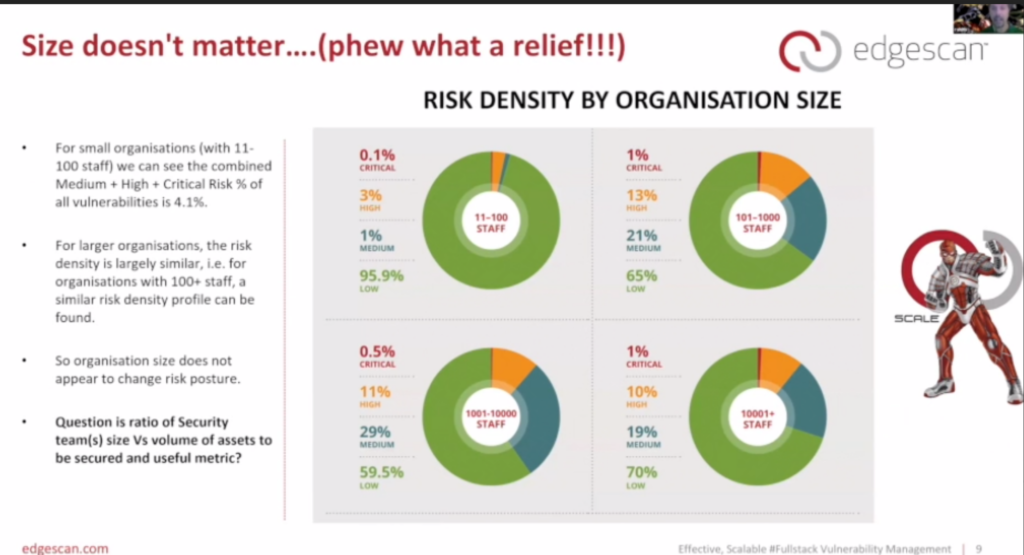

One of the more interesting findings was that an organization’s size and its staff/resources did not have a large impact on how many vulnerabilities it had.

There are three types of testing to help organizations keep pace with changes and reduce their vulnerabilities, he said:

- Penetration testing, which provides a deep, manual look at a system. Strengths include accuracy. Weaknesses include lack of scalability, cost and lack of on-demand availability.

- Vulnerability management, including automated, software-based testing of software. Strengths include on-demand availability and scale/volume. Weaknesses include accuracy, coverage and depth, the expertise required to validate the output, and poor metrics that require multiple tools.

- Hybrid, which uses automation augmented with human expertise. It has all the strengths of the other two and none of the weaknesses, he said.

He went on to cite four main reasons why traditional vulnerability management is failing:

- Relying on software alone to test software is “folly.” Scanners alone don’t work.

- Automation accuracy is not as strong as human accuracy. Attackers are human.

- Scale vs. depth: Scanners do scale; humans “do” depth. Our enemies do depth every time and are focused.

- “Change is constant.” Consultant-based security does not keep pace with change, but our enemies love change. “If code is changing constantly, we need to apply security testing constantly as well.”

In order to “flip that on its side,” vulnerability management should:

- Be available on demand.

- Include “continuous testing” and be accurate.

- Offer integration, including a continuous flow of validated vulnerability intelligence.

- Address the full stack.

“Hackers don’t care where they find vulnerabilities,” he pointed out, explaining: “They’ll find it in a system, get in – whether it’s in your application… because you released a change or whether it’s because you forgot to patch.”

The goal is to “obviously move the dial” and “reduce the risk – that’s the aim of the game,” he said.

It is also crucial to look at all the systems an organization has because “we can’t secure what we don’t know about,” he noted. Visibility is important, but many companies, small and large alike, don’t know what they have, he said.

“Don’t let secure application developments leave you sad,” he suggested, noting “it’s all really just software.”

Organizations should strive for “prevention by catching” vulnerabilities early, and detection before going live is also important, he said, adding: “We need to think of both at the same time.”

M&E Day was sponsored by IBM Security, Microsoft Azure, SHIFT, Akamai, Cartesian, Chesapeake Systems, ContentArmor, Convergent Risks, Deluxe, Digital Nirvana, edgescan, EIDR, PK, Richey May Technology Solutions, STEGA, Synamedia and Signiant and was produced by MESA, in cooperation with NAB Show New York, and in association with the Content Delivery & Security Association (CDSA) and the Hollywood IT Society (HITS).